Can't switch back to primary after server reboot.

We had a server reboot, and since then redundancy cannot switch back to the primary server.

For some reason the backup does not see the primary as ready.

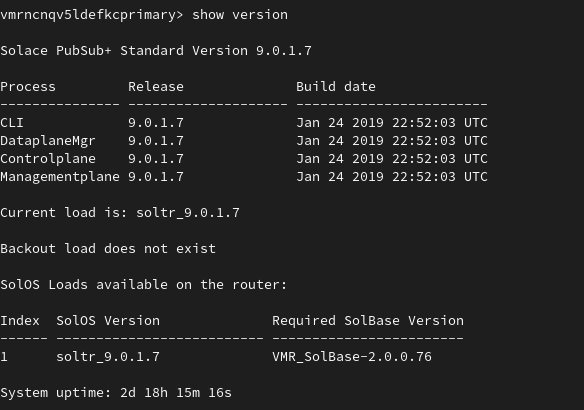

Screenshot taken on the primary.

There are no issues at the network level.

Comments

-

Haha, that's great to hear that it's been humming away all this time!

It looks like your primary isn't ready to take over again, since it's reporting "Local Inactive". Run `show redundancy detail` and see whether it gives more information.

I see this in the docs:

More info here: https://docs.solace.com/Features/HA-Redundancy/Monitoring-Appliance-Redundancy.htm

Hope that helps!

-

What does `show config-sync` report?

If it shows "out of sync", you may need to run `assert-master` or `assert-leader`, depending on the version of your broker.

Given that it's been unmanaged for years, I assume it's the former (`assert-master`), so if it is out of sync, try something like this:

```
ip-172-31-7-xxx# admin
ip-172-31-7-xxx(admin)# config-sync
ip-172-31-7-xxx(admin/config-sync)# assert-master router
ip-172-31-7-xxx(admin/config-sync)# assert-master message-vpn <your vpn>
ip-172-31-7-xxx(admin/config-sync)# show config-sync
```

-

Here are the details:

```
vmrncnqv5ldefkcprimary> show redundancy detail
Configuration Status     : Enabled
Redundancy Status        : Down
Last Failure Reason      : One or more nodes is offline
Last Failure Time        : Mar 27 2023 13:47:01 UTC
Operating Mode           : Message Routing Node
Switchover Mechanism     : Hostlist
Auto Revert              : No
Redundancy Mode          : Active/Standby
Active-Standby Role      : Primary
Mate Router Name         : vmrncnqv5ldefkcbackup
SMF plain-text Port      :
SMF compressed Port      :
SMF SSL Port             :
Mate-Link Connect Via    : vmrncnqv5ldefkc1:8741
ADB Link To Mate         : Up
  Last Failure Reason    : Mate Link Restart
  Last Failure Time      : Mar 24 2023 19:32:16 UTC
ADB Hello To Mate        : Up
  Last Failure Reason    : N/A
  Last Failure Time      :
  Hello Interval (ms)    : 1000
  Hello Timeout (ms)     : 3000
  Avg Hello Latency (ms) : 1
  Max Hello Latency (ms) : 224

                               Primary Virtual Router   Backup Virtual Router
                               ----------------------   ----------------------
Activity Status                Local Inactive           Shutdown
Routing Interface              intf0:1                  intf0:1
Routing Interface Status       Up
VRRP Status                    Initialize
VRRP Priority                  -1
Message Spool Status           AD-Standby
Priority Reported By Mate:     Active
  ADB Hello Protocol           Active
  VRRP                         None (-1)
Activity Status:               Mate Active
  Operational Status           Not Ready
  Redundancy Config Status     Enabled
  Message Spool Status         Ready
  SMRP Status                  Ready
  Db Build Status              Ready
  Db Sync Status               Ready
  Internal Priority            None
  Internal Activity Status     Mate Active
  Internal Redundancy State    Pri-NotReady
Message Spool Status:          Ready
  Message Spool Config Status  Enabled
  VRID Config Status           Ready
  ADB Status                   Ready
  Flash Module Status          Ready
  Power Module Status          Ready
  ADB Contents                 Ready
  Local Contents Key           224.251.75.43:49,122
  Mate Contents Key            224.251.75.43:49,122
  Schema Match                 Yes
  Disk Status                  Ready
  Disk Contents                Ready
  Disk Key (Primary)           224.251.75.43:49,122
  Disk Key (Backup)            224.251.75.43:49,122
  ADB Datapath Status          Ready
  Internal Redundancy State    AD-Standby
  Lock Owner                   N/A

VRID Config Parameter          Local Configuration      Received From Mate
-----------------------------  ----------------------   ----------------------
Primary VRID                   224.251.75.43            224.251.75.43
Backup VRID
AD-Enabled VRID                224.251.75.43            224.251.75.43
Disk WWN:
  Local:                       N/A
  Received From Mate:          N/A

* - indicates configuration mismatch between local and mate router
```
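If you want to watch for this state automatically rather than reading the output by eye, the "Key : Value" lines are easy to parse. This is a minimal Python sketch under two assumptions: the captured text has one field per line, and `parse_redundancy_fields` is a hypothetical helper name, not part of any Solace tooling. The sample lines are copied from the output above.

```python
# Sample lines copied from the `show redundancy detail` output above.
SAMPLE = """\
Configuration Status : Enabled
Redundancy Status : Down
Last Failure Reason : One or more nodes is offline
Last Failure Time : Mar 27 2023 13:47:01 UTC
Active-Standby Role : Primary
Mate-Link Connect Via : vmrncnqv5ldefkc1:8741
ADB Link To Mate : Up
Last Failure Reason : Mate Link Restart
"""


def parse_redundancy_fields(text: str) -> dict:
    """Map each 'Key : Value' label to the first value seen for it."""
    fields = {}
    for line in text.splitlines():
        # Split on the first " : " only, so values like "host:8741" stay intact.
        key, sep, value = line.partition(" : ")
        key = key.strip()
        if sep and key not in fields:
            fields[key] = value.strip()
    return fields


fields = parse_redundancy_fields(SAMPLE)
if fields.get("Redundancy Status") != "Up":
    print("Redundancy is", fields.get("Redundancy Status"), "-", fields.get("Last Failure Reason"))
```

Only the first occurrence of each key is kept, because labels such as `Last Failure Reason` repeat under the ADB link sections; a per-section parser would be needed if you care about those.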

-

Yes, I can see the traffic with tcpdump.

The nodes can telnet to each other on any port.

I've checked the logs everywhere.

I haven't been able to find out why the primary marks all nodes in the group as offline.

From the primary I can reach the mate on port 8741.

Likewise, I see traffic from the primary arriving on those nodes on the config sync port 8300.
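The port checks done here with telnet can be scripted with Python's standard `socket` module, so they are easy to repeat from each node. This is a minimal sketch: `port_open` is a hypothetical helper name, and the host name in the usage line below is taken from the `Mate-Link Connect Via` field of the output above and is assumed to resolve from wherever you run it. Keep in mind that a successful TCP connect only shows that something is listening on the port, not that the service behind it considers its peers healthy.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the name and tries each address it returns.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False
```

For example, `port_open("vmrncnqv5ldefkc1", 8741)` and `port_open("vmrncnqv5ldefkc1", 8300)` repeat the two checks mentioned in this reply.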

-

I found the cause.

The system logs contained complaints from Consul:

```
2023-03-28T11:48:58.460+00:00 <local5.warning> vmrncnqv5ldefkc1 consul[2091]: consul: error getting server health from "vmrncnqv5ldefkcprimary": context deadline exceeded
2023-03-28T11:48:59.460+00:00 <local5.warning> vmrncnqv5ldefkc1 consul[2091]: consul: error getting server health from "vmrncnqv5ldefkcprimary": rpc error getting client: failed to get conn: dial tcp 10.60.25.6:0->127.0.0.1:8300: getsockopt: connection refused
2023-03-28T11:49:00.460+00:00 <local5.warning> vmrncnqv5ldefkc1 consul[2091]: consul: error getting server health from "vmrncnqv5ldefkcprimary": context deadline exceeded
2023-03-28T11:49:01.458+00:00 <local5.warning> vmrncnqv5ldefkc1 consul[2091]: consul: error getting server health from "vmrncnqv5ldefkcprimary": rpc error getting client: failed to get conn: dial tcp 10.60.25.6:0->127.0.0.1:8300: getsockopt: connection refused
```
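The useful detail in these warnings is the dial target: the node `vmrncnqv5ldefkc1` (apparently 10.60.25.6) tries to reach the Consul server on `vmrncnqv5ldefkcprimary` at 127.0.0.1:8300, which is its own loopback interface. A minimal Python sketch for spotting that pattern in a log file follows. The regular expression only handles IPv4 and is written against the exact message format quoted here, so treat it as an assumption for other Consul versions; `loopback_dials` is a hypothetical helper name.

```python
import ipaddress
import re

# Matches the "dial tcp <local-ip>:<port>-><remote-ip>:<port>" fragment (IPv4 only).
DIAL_RE = re.compile(r"dial tcp [\d.]+:\d+->(?P<remote>[\d.]+):(?P<port>\d+)")


def loopback_dials(lines):
    """Yield (remote_ip, port) for every dial attempt whose target is a loopback address."""
    for line in lines:
        m = DIAL_RE.search(line)
        if not m:
            continue
        try:
            addr = ipaddress.ip_address(m["remote"])
        except ValueError:
            continue  # matched digits and dots that are not a valid IPv4 address
        if addr.is_loopback:
            yield m["remote"], int(m["port"])


# Two of the log lines quoted above.
SAMPLE = [
    '2023-03-28T11:48:58.460+00:00 <local5.warning> vmrncnqv5ldefkc1 consul[2091]: consul: '
    'error getting server health from "vmrncnqv5ldefkcprimary": context deadline exceeded',
    '2023-03-28T11:48:59.460+00:00 <local5.warning> vmrncnqv5ldefkc1 consul[2091]: consul: '
    'error getting server health from "vmrncnqv5ldefkcprimary": rpc error getting client: '
    'failed to get conn: dial tcp 10.60.25.6:0->127.0.0.1:8300: getsockopt: connection refused',
]

print(list(loopback_dials(SAMPLE)))  # [('127.0.0.1', 8300)]
```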

Then I looked at the Consul agent config on both nodes:

`/var/lib/solace/consul.json`

On the primary there is, for some reason, a wrong option; on the backup the option is not present at all:

`"advertise_addr": "127.0.0.1",`

I don't know where it comes from, but it certainly prevents Consul from getting its cluster up, because the primary advertises 127.0.0.1 instead of its own node IP.
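A read-only check for this condition is straightforward. The Python sketch below assumes that `consul.json` is plain JSON with `advertise_addr` as a top-level key, as the line quoted above suggests; `advertises_loopback` and `load_config` are hypothetical helper names.

```python
import ipaddress
import json

CONSUL_CONFIG = "/var/lib/solace/consul.json"  # path from this thread


def advertises_loopback(config: dict) -> bool:
    """Return True if the agent config sets advertise_addr to a loopback address."""
    addr = config.get("advertise_addr")
    if addr is None:
        return False  # option not set, as on the backup in this thread
    try:
        return ipaddress.ip_address(addr).is_loopback
    except ValueError:
        # Not a literal IP (Consul also accepts go-sockaddr templates); check by hand.
        return False


def load_config(path: str = CONSUL_CONFIG) -> dict:
    """Read the agent config file as JSON."""
    with open(path) as f:
        return json.load(f)
```

On the primary, `advertises_loopback(load_config())` would return `True` while the bad option is present, and `False` on the backup.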

So I removed that line and killed the agent. It restarted automatically and the Consul cluster came up.

After that, the Solace primary node became fully healthy and redundancy switched back to it.

Now I know why it broke after the reboot :)

Someone had already applied a temporary fix for this years ago, and it was lost when the server rebooted.

-

I forgot to mention that these are running in Docker.

Once I restart Docker, the config comes back with `"advertise_addr": "127.0.0.1"`.

I assume that is part of the product.

So far I haven't found how to set it the right way.

So I will most likely set up a simple cron job that checks the config, removes the bad option if it is there, and then restarts Consul.
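The cron approach described here could look roughly like the following Python sketch. It is a workaround rather than a fix for whatever regenerates the file, and it rests on assumptions worth checking first: it runs inside the container where Consul runs, `consul.json` is plain JSON with a top-level `advertise_addr`, and, as observed in this thread, killing the agent makes something in the container start it again. `pkill -x consul` is the standard procps `pkill` matching the exact process name; the function names are hypothetical.

```python
import json
import os
import stat
import subprocess
import tempfile

CONSUL_CONFIG = "/var/lib/solace/consul.json"  # path from this thread
BAD_ADDR = "127.0.0.1"


def strip_loopback_advertise(path: str = CONSUL_CONFIG) -> bool:
    """Remove advertise_addr if it is 127.0.0.1. Return True if the file was changed."""
    if not os.path.exists(path):
        return False  # config not generated yet (e.g. container still starting)
    with open(path) as f:
        cfg = json.load(f)
    if cfg.get("advertise_addr") != BAD_ADDR:
        return False
    del cfg["advertise_addr"]

    st = os.stat(path)
    # Write to a temp file in the same directory, then swap it in atomically.
    fd, tmp = tempfile.mkstemp(dir=os.path.dirname(path) or ".")
    with os.fdopen(fd, "w") as f:
        json.dump(cfg, f, indent=2)
    os.chmod(tmp, stat.S_IMODE(st.st_mode))  # keep the original permissions
    try:
        os.chown(tmp, st.st_uid, st.st_gid)  # and the original owner, when allowed
    except PermissionError:
        pass
    os.replace(tmp, path)
    return True


def restart_consul() -> None:
    # ASSUMPTION: the agent is supervised and comes back on its own after being
    # killed, as happened in this thread. Sends SIGTERM to processes named "consul".
    subprocess.run(["pkill", "-x", "consul"], check=False)


def main() -> None:
    if strip_loopback_advertise():
        restart_consul()


if __name__ == "__main__":
    main()
```

A crontab entry such as `*/5 * * * * python3 /path/to/fix_consul_advertise.py` would run it every five minutes (the script path is a placeholder). Note that `json.dump` rewrites the file with its own indentation, which should not matter to Consul but will show up in a diff.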

-

@progman, just checking back in on this thread. I noticed you already provided the SolOS version in reply #6 above, so please ignore my last comment.

Did you try upgrading the broker? 9.0.1 is a pretty old version, and you might have to upgrade to an intermediate version before going to the latest (10.3.1). If you run into this problem on a newer broker, I'm sure our engineering team would want to take a look at your issue. With 9.0.1 being so old, I don't think we'll get much help from them.
