How to publish a persistent message to a topic so that multiple consumers can consume it at the same time

Hi, I'm Owais.

I have been working on a project where I am implementing a Solace pub/sub API service, but I am facing a problem.

The problem is this: "Let's say there are 100 consumers in my organisation and I want to publish a message to a topic, but only 50 of them are subscribed. When I publish a message using a queue, only one consumer receives it, and then the message vanishes from the queue. How can we configure things so that the message stays in the queue until all 100 consumers have received it? Not all 100 consumers are connected at the moment, but they will connect in the future, so until then the message should stay in the queue."

Our number of consumers could grow into the millions. Is there any way to implement this successfully?

Thank you.

Best Answers


Hi there @owais, welcome to the Community. Into the millions, you say?? Wow, that's a lot! As in: you publish one message and expect a million subscribers to each get a copy of it, in a Guaranteed/persistent fashion (in case they are offline).

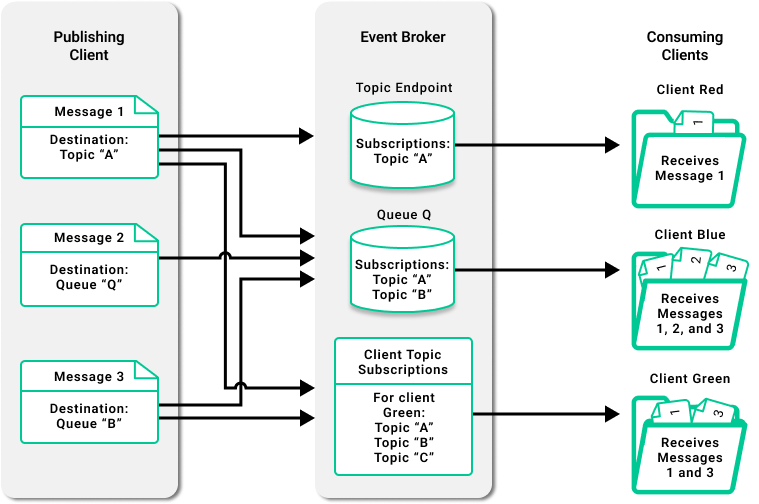

Your testing is correct: a message in a queue can only be delivered to one consumer, even if many consumers are bound to the queue. If you need the publish-subscribe (one-to-many) pattern in Solace with Guaranteed delivery, then every consumer needs its own queue, and each queue is subscribed to a topic (e.g. `banking/notification/>`). Whenever you publish on a matching topic, each queue gets a copy. At least in Solace, we only write the message to disk once, so large fanout doesn't take up a lot of room on the broker. But if you are talking about millions of consumers, this is probably not easy: it would require a networked mesh (DMR) of brokers, and very large ones.

Is this an IoT use case? Why such large scale? And what type of data? Depending on the use case, there may be other approaches. For example, if a consumer is offline for a while, does it want to receive all the messages it missed while offline, or only the most recent one? How many different topics are we talking about here?
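To make the per-consumer-queue pattern concrete, here is a toy, in-memory Python model. It is not the Solace API: `ToyBroker`, `provision_queue`, `publish`, `consume` and `topic_matches` are hypothetical names for illustration only. It assumes simplified wildcard rules (`*` matches exactly one level, a trailing `>` matches one or more levels; prefix wildcards such as `bank*` are ignored) and mimics the "written to disk once" point by storing each payload once and giving every matching queue a reference to it.

```python
from collections import deque
from dataclasses import dataclass, field


def topic_matches(subscription: str, topic: str) -> bool:
    """Simplified Solace-style matching: '*' matches exactly one level and a
    trailing '>' matches one or more remaining levels. Prefix wildcards such
    as 'bank*' are NOT handled in this sketch."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(sub_levels):
        if level == ">" and i == len(sub_levels) - 1:
            return len(topic_levels) > i  # '>' needs at least one more level
        if i >= len(topic_levels):
            return False
        if level != "*" and level != topic_levels[i]:
            return False
    return len(sub_levels) == len(topic_levels)


@dataclass
class ToyBroker:
    store: dict = field(default_factory=dict)          # msg id -> [payload, refcount]
    queues: dict = field(default_factory=dict)         # queue name -> deque of msg ids
    subscriptions: dict = field(default_factory=dict)  # queue name -> topic subscriptions
    next_id: int = 1

    def provision_queue(self, name: str, *subs: str) -> None:
        self.queues.setdefault(name, deque())
        self.subscriptions.setdefault(name, []).extend(subs)

    def publish(self, topic: str, payload: str):
        targets = [q for q, subs in self.subscriptions.items()
                   if any(topic_matches(s, topic) for s in subs)]
        if not targets:
            return None  # no matching queue, so nothing is stored
        msg_id = self.next_id
        self.next_id += 1
        self.store[msg_id] = [payload, len(targets)]  # payload stored once
        for q in targets:
            self.queues[q].append(msg_id)             # each queue gets a reference
        return msg_id

    def consume(self, queue_name: str):
        """Whichever consumer calls this first gets the next message; it is
        then gone from this queue (other queues keep their references)."""
        q = self.queues[queue_name]
        if not q:
            return None
        msg_id = q.popleft()
        entry = self.store[msg_id]
        entry[1] -= 1
        if entry[1] == 0:
            del self.store[msg_id]  # the last queue has released it
        return entry[0]


broker = ToyBroker()
for user in ("alice", "bob", "carol"):
    broker.provision_queue(f"q.{user}", "banking/notification/>")

broker.publish("banking/notification/rates", "rates changed")
print(len(broker.store))          # 1
print(broker.consume("q.alice"))  # rates changed
print(broker.consume("q.alice"))  # None
print(broker.consume("q.carol"))  # rates changed (carol may connect much later)
```

Consuming from `q.alice` does not touch the copy waiting in `q.carol`, which is the behaviour the question asks for; the price is one queue per consumer.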


Commenting to follow; sounds like an interesting use case 😊

If there is some common data that needs to be made available to N consumers, where N is large and not known up front, the Replay feature can offer a nice option too.

The Replay queue (the replay log, which has a fixed maximum size) sits in the broker, listening to all messages and holding a reference to each. Whenever a transient consumer comes along, it can bind to an available queue, set or update its topic subscriptions, and then trigger replay of all matching messages. (The queue fills up immediately and starts delivering to the connected consumer.)

After the application has consumed everything, it can 'release' the queue and go away. The next time it returns, it follows the same workflow to start consuming again, with one small modification: it specifies the Replication Group Message ID (RGMID) of the last message it consumed, so that replay starts from that point onwards and no message is processed twice.
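The replay workflow can be sketched as a toy Python model. This is not the Solace API: `ToyReplayLog`, `record`, `replay` and the integer ids (standing in for RGMIDs) are hypothetical, topic matching is simplified to exact match or a trailing `>`, and on a real broker replay is requested through the Solace APIs or management tools, none of which is modelled here. It shows the two ideas above: a fixed-size log that drops its oldest entries, and a consumer resuming after the last id it processed.

```python
from collections import deque
from itertools import count


def matches(subscription: str, topic: str) -> bool:
    # Simplified: exact match, or a trailing '>' matching one or more levels.
    if subscription.endswith("/>"):
        prefix = subscription[:-1]  # keeps the trailing '/'
        return topic.startswith(prefix) and len(topic) > len(prefix)
    return subscription == topic


class ToyReplayLog:
    """Hypothetical stand-in for a broker replay log: keeps only the newest
    `max_messages` messages, each tagged with an increasing id that plays
    the role of the RGMID in this sketch."""

    def __init__(self, max_messages: int):
        self._log = deque(maxlen=max_messages)  # oldest entries fall off when full
        self._ids = count(1)

    def record(self, topic: str, payload: str) -> int:
        msg_id = next(self._ids)
        self._log.append((msg_id, topic, payload))
        return msg_id

    def replay(self, subscriptions, after_id=None):
        """All logged messages matching any subscription, optionally only
        those newer than `after_id` (the last id the consumer processed)."""
        return [(i, t, p) for (i, t, p) in self._log
                if (after_id is None or i > after_id)
                and any(matches(s, t) for s in subscriptions)]


log = ToyReplayLog(max_messages=1000)
log.record("news/sports/football", "goal!")
log.record("news/weather", "rain")
log.record("news/sports/tennis", "match point")

# First visit: replay everything that matches, remember the last id processed.
first = log.replay(["news/sports/>"])
last_id = first[-1][0]
print([p for _, _, p in first])   # ['goal!', 'match point']

log.record("news/sports/football", "full time")

# Return visit: resume after last_id so nothing is processed twice.
second = log.replay(["news/sports/>"], after_id=last_id)
print([p for _, _, p in second])  # ['full time']
```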

It all depends on the use case and whether this would fit, of course, so please do share more info with us!


Answers


Nice @JamilAhmed, I like this idea! Use the replay queue for any number of consumers that come and go. They create a tempQ when they connect and just request everything after the last RGMID they've seen.

Next question: who/where/what stores that RGMID? Dumb applications might not want to manage that themselves. Now we're into the "ZooKeeper keeping track of offsets" issue that Kafka has/had. An LVQ (last value queue) per consumer is too heavy. PS+ Cache could be used, but it is not Guaranteed.
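To make concrete what "managing that themselves" would mean for an application, here is a minimal Python sketch of a consumer keeping its own checkpoint in a local JSON file. `CheckpointFile` and the id strings are hypothetical; a real application would store whatever message id the Solace API hands back, treated as an opaque string. It also hints at the burden: the app has to save after processing (so a crash in between means re-processing, i.e. at-least-once behaviour), and the file becomes state it must look after.

```python
import json
import os
import tempfile
from pathlib import Path


class CheckpointFile:
    """Hypothetical application-side store for the last processed message id
    (for example an RGMID kept as an opaque string), one entry per consumer."""

    def __init__(self, path: Path):
        self.path = path

    def _read(self) -> dict:
        return json.loads(self.path.read_text()) if self.path.exists() else {}

    def load(self, consumer: str):
        return self._read().get(consumer)  # None if never checkpointed

    def save(self, consumer: str, last_id: str) -> None:
        data = self._read()
        data[consumer] = last_id
        # Write a temp file in the same directory, then rename it over the old
        # one, so a crash mid-write cannot leave a half-written checkpoint.
        fd, tmp = tempfile.mkstemp(dir=self.path.parent, suffix=".tmp")
        with os.fdopen(fd, "w") as f:
            json.dump(data, f)
        os.replace(tmp, self.path)


store = CheckpointFile(Path(tempfile.mkdtemp()) / "checkpoints.json")
print(store.load("app-1"))            # None: first run, replay from the start
store.save("app-1", "example-id-42")  # placeholder, not a real RGMID format
print(store.load("app-1"))            # example-id-42
```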


A little late, but in case it helps, here is an approach.

There is also a good explanation here: https://docs.solace.com/Messaging/Guaranteed-Msg/Endpoints.htm#Queue_Access_Types

Below is an approach: publish to a topic / topic endpoint, and consume through queues with topic subscriptions.

For example, let's say that a service publishes to:

Topic Endpoint Name = service1-topic-endpoint

Topic = singapore/management/created/version1

Create as many consumers as required, each consuming from a queue with a topic subscription. For example, let's say we have the following queues:

SINGAPORE.SOME_APPLICATION1.FLOW.VERSION1 with topic subscription singapore/management/created/version1

SINGAPORE.SOME_APPLICATION2.FLOW.VERSION1 with topic subscription singapore/management/created/version1

SINGAPORE.SOME_APPLICATION3.FLOW.VERSION1 with topic subscription singapore/management/created/version1

...and so on

Define the max TTL according to your needs; each consumer will then be able to receive the same message until it consumes it or the TTL expires.
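A toy Python model of the fan-out plus TTL behaviour described above. `TtlQueue` is hypothetical, time is passed in explicitly to keep the example deterministic, and it assumes a single max TTL per queue counted from enqueue time; on a real broker, how a queue's max TTL interacts with a message's own TTL depends on how the queue is configured.

```python
from collections import deque


class TtlQueue:
    """Toy queue whose entries expire `max_ttl` seconds after being enqueued."""

    def __init__(self, max_ttl: float):
        self.max_ttl = max_ttl
        self._items = deque()  # (expires_at, payload)

    def enqueue(self, payload: str, now: float) -> None:
        self._items.append((now + self.max_ttl, payload))

    def consume(self, now: float):
        while self._items:
            expires_at, payload = self._items.popleft()
            if now < expires_at:
                return payload
            # Expired: silently dropped here (a real broker might instead
            # move it to a dead message queue, depending on configuration).
        return None


names = [f"SINGAPORE.SOME_APPLICATION{n}.FLOW.VERSION1" for n in (1, 2, 3)]
queues = {name: TtlQueue(max_ttl=60) for name in names}

# One publish to singapore/management/created/version1: each subscribed queue gets a copy.
for q in queues.values():
    q.enqueue("created event", now=0)

print(queues[names[0]].consume(now=10))  # created event
print(queues[names[1]].consume(now=59))  # created event
print(queues[names[2]].consume(now=61))  # None (expired before this consumer connected)
```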

Pros: easy to implement.

Cons: the single topic is a point of failure.

Topic segregation would be a better approach from my perspective, as a topic hierarchy can help distribute the flow load according to the defined hierarchy. For example: singapore/management/«MANAGEMENT_TYPE»/created/version1

MANAGEMENT_TYPE could come from the API calls. The naming convention is only for illustration purposes.
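With one topic level per MANAGEMENT_TYPE, each queue can subscribe to just its own slice of the traffic, or use a `*` wildcard at that level to receive all of it. A small Python sketch; `build_topic`, the example type values `hr` and `finance`, and the simplified matcher (whole-level `*` only, no `>`) are hypothetical illustrations, not part of any Solace API.

```python
def build_topic(management_type: str) -> str:
    """Hypothetical helper following the illustrative convention
    singapore/management/<MANAGEMENT_TYPE>/created/version1."""
    if not management_type or "/" in management_type:
        raise ValueError("MANAGEMENT_TYPE must be one non-empty topic level")
    return f"singapore/management/{management_type}/created/version1"


def matches(subscription: str, topic: str) -> bool:
    # Simplified: '*' matches exactly one whole level; '>' is not handled here.
    sub, top = subscription.split("/"), topic.split("/")
    return len(sub) == len(top) and all(s in ("*", t) for s, t in zip(sub, top))


topic = build_topic("finance")                       # e.g. derived from an API call
hr_sub = "singapore/management/hr/created/version1"  # queue for one type only
all_sub = "singapore/management/*/created/version1"  # queue for every type

print(topic)                    # singapore/management/finance/created/version1
print(matches(hr_sub, topic))   # False
print(matches(all_sub, topic))  # True
```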