Default vs Compressed Connection

Hello everyone!

While using the Solace PubSub+ broker in Docker on localhost, I noticed something strange.

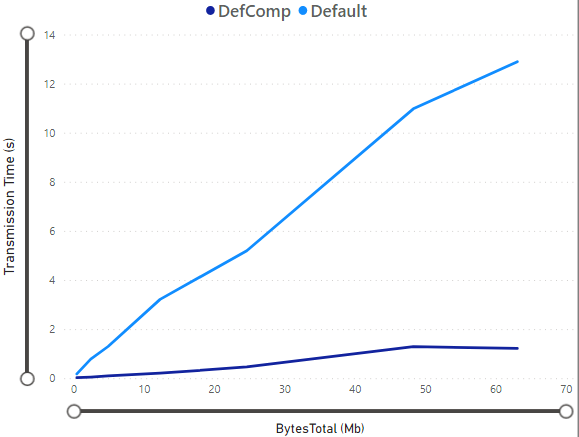

The default connection performs better than the compressed connection:

Whereas, while using the cloud broker, the observation is opposite:

Since the compressed connection compresses messages before sending them, I would expect performance with compression to be better, as seen with the cloud broker.

Can anyone help me understand the reason for the observations on localhost?

Thanks in advance!

Comments

-

Hi,

This is the expected behaviour when applying compression. I suspect you would get similar results by enabling compression support on nginx or another web server.

If you think about it - compression comes at the cost of processing time to compress/decompress messages.

The benefit of using compression comes into play when your setup is constrained by the network, in terms of latency and/or bandwidth.

In this case compression means you need to send fewer packets over a relatively slow connection (e.g. your laptop to a Solace cloud broker). The geographic distance between your laptop and the cloud instance alone will add milliseconds - probably > 10ms - to the latency.

This means the time spent on compression/decompression is made up for by the time saved transmitting the reduced amount of data over the network.

Your localhost setup is not network constrained. What you measure in the first graph is the overhead of applying compression. The takeaway from these two graphs is that it only makes sense to enable compression if your constraints are network related.
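To make the trade-off concrete, here is a rough sketch in Python. It does not use the Solace API: `zlib` is only a stand-in for whatever compression the client library applies, and `estimated_send_time`, the payload, and the bandwidth figures are made-up illustrations. It estimates the total time as CPU time (compress + decompress) plus time on the wire, and ignores everything else.

```python
import time
import zlib


def estimated_send_time(payload: bytes, bandwidth_bytes_per_s: float, compress: bool) -> float:
    """Rough estimate: CPU time for compress + decompress, plus time on the wire.

    Hypothetical helper for illustration only; not part of any Solace API.
    """
    cpu_seconds = 0.0
    wire_bytes = len(payload)
    if compress:
        start = time.perf_counter()
        packed = zlib.compress(payload)   # sender side
        zlib.decompress(packed)           # receiver side
        cpu_seconds = time.perf_counter() - start
        wire_bytes = len(packed)
    return cpu_seconds + wire_bytes / bandwidth_bytes_per_s


# Assumed, highly compressible test payload of roughly 1 MB.
payload = b"solace message payload " * 50_000

# Assumed link speeds: a slow WAN-like link vs. an effectively unconstrained local link.
for label, bandwidth in [("slow link", 1e5), ("local link", 1e15)]:
    plain = estimated_send_time(payload, bandwidth, compress=False)
    packed = estimated_send_time(payload, bandwidth, compress=True)
    winner = "compressed" if packed < plain else "default"
    print(f"{label}: default={plain:.6f}s compressed={packed:.6f}s -> {winner} wins")
```

On the slow link the wire time dominates, so shrinking the payload pays for the CPU work. On the unconstrained link the wire time is close to zero, so the CPU work is pure overhead, which matches what the localhost graph shows.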

-

All good points @swenhelge! With Docker, if you are running your client locally on the same host, the processing load of compressing at the client and decompressing at the broker falls on the same host. With the cloud broker, I assume your client is running elsewhere, so the overhead is split between the two hosts.
